Thunderforge Unveils AI Agents for Wargames

The Defense Innovation Unit and the Development of Thunderforge

The Defense Innovation Unit (DIU), part of the U.S. Department of Defense (DOD), is spearheading an experimental initiative known as Thunderforge. This project aims to develop a custom agentic AI system that employs multiple digital "agents" to critique war plans across various military domains. These agents work in parallel, analyzing different aspects of military strategies and identifying potential weaknesses that might be overlooked by human planners.

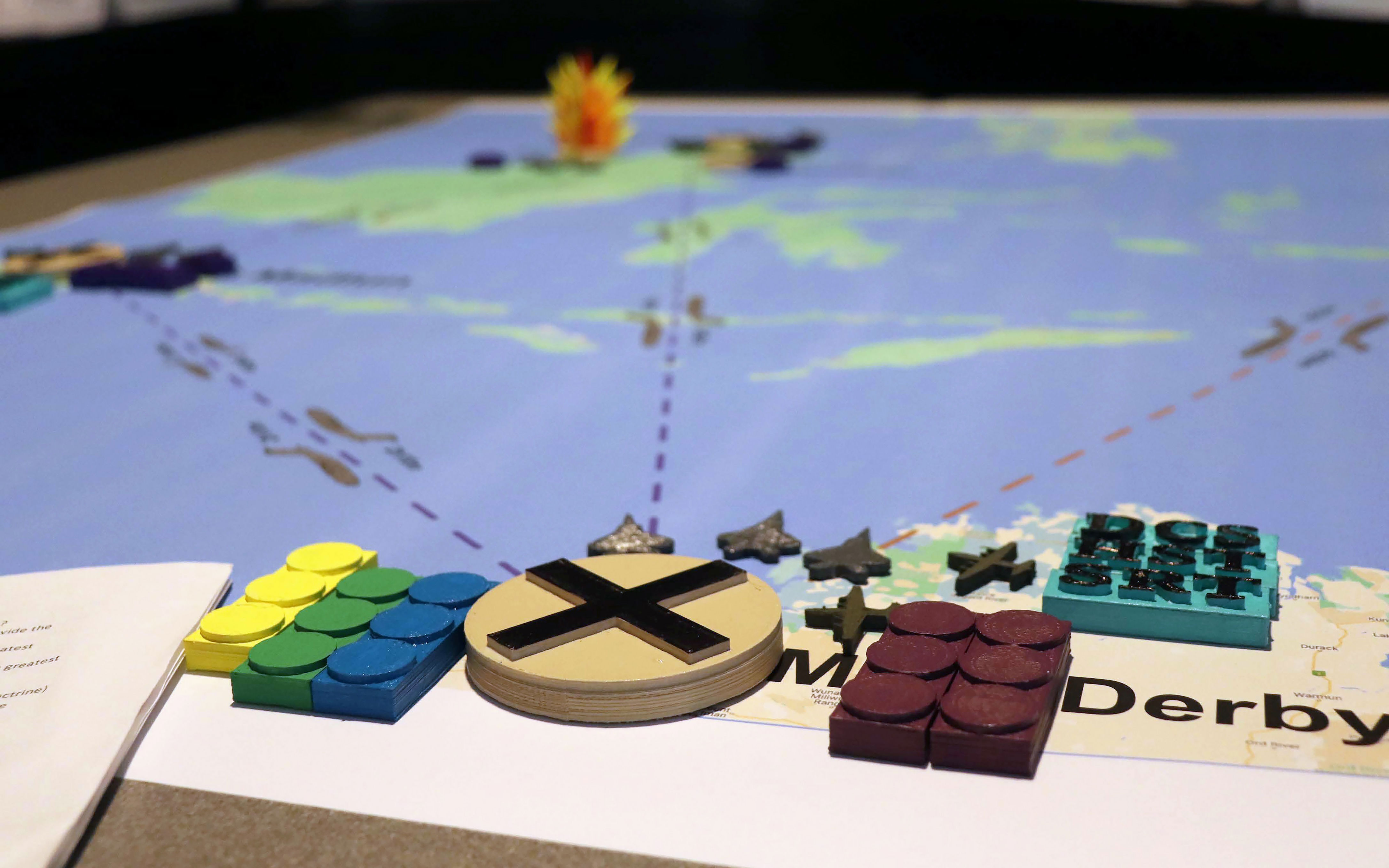

Thunderforge's capabilities were tested during a tabletop exercise conducted by the U.S. Indo-Pacific Command (INDOPACOM) in June. The project was announced in March and is still in its early stages. The initial rollout will target commanders and planners within INDOPACOM and its sister command, U.S. European Command (EUCOM). Eventually, the system will access internal databases, run DOD-grade simulations, and integrate with existing software such as DARPA’s SAFE-SiM modeling and simulation architecture. This integration will allow planners to generate numerous realistic military scenarios for analysis.

California-based Scale AI is leading the project, with Microsoft providing its large language model (LLM) technology and Anduril contributing to the modeling efforts. According to Scale AI, its system is designed to coordinate multiple custom agents, each drawing on a range of models and acting as a digital staff officer that helps synthesize data for mission-critical planning.

"These agents dynamically collaborate, fusing separate analyses into a more comprehensive view for operational planners to consider," says Dan Tadross, the head of public sector at Scale AI. "This approach is designed to shift the role of the operator from being 'in the loop'—micromanaging a single process—to being 'on the loop,' where they can apply their strategic judgment to the options generated."

Enhancing AI in Military Planning

The DIU has divided Thunderforge’s development into two tracks, starting with augmenting the cognitive plan-writing process through red-teaming. The system will submit a human-authored plan to a team of AI agents to offer perspectives across multiple domains—including logistics, intelligence, and cyber and information operations.
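To make the red-teaming idea concrete, here is a minimal Python sketch of the fan-out-and-fuse pattern the DIU and Scale AI describe: one human-authored plan goes to several domain critics at once, and their findings are merged into a single view for the planner. It is not Thunderforge's code. The critic functions (`logistics_critic`, `intelligence_critic`, `cyber_critic`), the `red_team` helper, and the keyword checks inside them are hypothetical placeholders standing in for calls to LLM-backed agents.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass


@dataclass
class Critique:
    domain: str
    findings: list[str]


# Hypothetical stand-ins for LLM-backed critic agents. Each one inspects the
# plan for a single domain; a real agent would call a model instead of
# matching keywords.
def logistics_critic(plan: str) -> Critique:
    findings = []
    if "resupply" not in plan.lower():
        findings.append("No resupply schedule is specified.")
    return Critique("logistics", findings)


def intelligence_critic(plan: str) -> Critique:
    findings = []
    if "assumption" not in plan.lower():
        findings.append("Intelligence assumptions are not stated explicitly.")
    return Critique("intelligence", findings)


def cyber_critic(plan: str) -> Critique:
    findings = []
    if "backup comms" not in plan.lower():
        findings.append("No fallback if communications networks are degraded.")
    return Critique("cyber/information operations", findings)


CRITICS = [logistics_critic, intelligence_critic, cyber_critic]


def red_team(plan: str) -> dict[str, list[str]]:
    """Send one plan to every critic in parallel and fuse their findings."""
    with ThreadPoolExecutor(max_workers=len(CRITICS)) as pool:
        critiques = list(pool.map(lambda critic: critic(plan), CRITICS))
    # Fuse: keep only domains that raised issues, so the planner sees one summary.
    return {c.domain: c.findings for c in critiques if c.findings}


if __name__ == "__main__":
    plan = "Move two brigades to the coast; resupply by sea every 72 hours."
    for domain, issues in red_team(plan).items():
        print(f"[{domain}]")
        for issue in issues:
            print(f"  - {issue}")
```

Because `ThreadPoolExecutor.map` yields results in the order the critics were submitted, the fused summary is deterministic even though the critics run concurrently. Model calls are mostly waiting on I/O, which is why threads are a reasonable fit for this kind of fan-out.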

"You can really customize it however you want," says Bryce Goodman, the DIU’s chief strategist for AI.

The second track will link with the DOD’s most advanced modeling software to run simulations, generate and analyze outputs, and interpret the results. Even for humans, building simulations and making sense of their raw results requires extensive specialized training. Goodman says, “Where that becomes really powerful is that now, the LLMs are calling on tools the DOD has built and validated, and suddenly you’re combining the brute pattern recognition that AI does with physics-based simulations or other logical reasoning tools that perform similar tasks. It’s scaffolding in all those capabilities.”

With track one underway, the DIU has already developed a minimum viable product, though it requires deeper integration with classified data systems and separate government partnerships. As the “plumbing” gets built, Goodman says his team hopes to have the system validating existing scenarios by the end of this year, and then writing scenarios from scratch sometime in 2026.

AI’s Role in Conflict Simulation

Conflict simulation has long been a fixture of operational planning and training, with wargame participants mapping out battlefield movements on sand tables. In the 1970s, Lawrence Livermore National Laboratory introduced Janus, the world’s first player-interactive combat simulator operating in near-real-time. It was a key planning tool for the United States’ 1989 invasion of Panama and, later, Operation Desert Storm.

Today’s agentic AI technology goes further with autonomous, multi-stream data processing, says political scientist Stephen Worman, director of the RAND Center for Gaming, which specializes in wargames and strategic simulations for the U.S. military. “These capabilities can improve situational awareness, speed threat modeling iterations, and streamline re-supply or pre-deployment planning.” That said, the downsides warrant caution, Worman adds, citing a Texas National Security Review paper written by Jon Lindsay, an associate professor at the Georgia Institute of Technology specializing in cybersecurity, privacy, and international affairs. “He underscores that AI excels in structured, bounded, low-stakes domains with routine data, but military operations are often messy, chaotic, and rare events,” says Worman. “There’s a premium on getting it right, and failure can be catastrophic.”

Without formal frameworks to interpret why AI agents make decisions or how much trust to place in their reasoning, the resulting opacity creates a false sense of precision, Worman says, adding, “An agent might perform plausibly in most situations but it might simply be amplifying biases or exploiting flaws in the underlying model.”

Goodman admits the project faces significant research challenges. LLMs sometimes confidently hallucinate answers. An LLM might present a convincing plan that, on closer inspection, sends a warship plowing through Australia. Goodman notes this kind of output seems thoughtful until you interrogate the underlying reasoning and find no real logical coherence. “My baseline assumption is that LLMs are going to hallucinate and be flawed and opaque, and we’re not going to understand all their failure modes,” Goodman says. “That’s why it’s more about understanding the user’s context. If my goal in generating an output is to spur thought for me, but not get a finished product, then the hallucinations are less of a concern. That’s why we’re starting with critiquing human plans.”

Hallucinations can also be diminished by allowing an agent to call upon external tools. For example, instead of guessing how many tanks are deployable in a zone, the AI agent queries a database for the latest information. Scale AI cites additional agentic safeguards like tracing for true explainability, which allows an operator to see the specific chain of evidence and reasoning that led to a conclusion. “Continuous adversarial testing to actively probe for hidden biases, vulnerabilities, or potential for misalignment is a powerful tool before agentic deployment,” Tadross says. “Where applicable, formal verification methods provide a way to mathematically prove that an agent’s behavior will remain within pre-defined, acceptable bounds.”
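The tool-calling safeguard can be illustrated with a short Python sketch, assuming a hypothetical `deployable_units` table in an in-memory SQLite database filled with placeholder rows. The agent answers a tank-count question only from what the database tool returns, records each step in a trace an operator could inspect, and declines to estimate when the tool finds nothing. The function names, schema, and data are illustrative, not Scale AI's or the DOD's.

```python
import sqlite3
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Answer:
    value: Optional[int]
    trace: List[str] = field(default_factory=list)


def build_demo_db() -> sqlite3.Connection:
    """In-memory database with a hypothetical schema and placeholder rows."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE deployable_units (zone TEXT, unit_type TEXT, quantity INTEGER)"
    )
    conn.executemany(
        "INSERT INTO deployable_units VALUES (?, ?, ?)",
        [("zone-a", "tank", 12), ("zone-a", "tank", 4), ("zone-b", "tank", 7)],
    )
    return conn


def count_tanks_tool(conn: sqlite3.Connection, zone: str) -> Optional[int]:
    """The 'tool' the agent calls; SUM over no rows yields None."""
    (total,) = conn.execute(
        "SELECT SUM(quantity) FROM deployable_units "
        "WHERE zone = ? AND unit_type = 'tank'",
        (zone,),
    ).fetchone()
    return total


def answer_tank_question(conn: sqlite3.Connection, zone: str) -> Answer:
    """Answer from tool output only, logging each step so it can be audited."""
    answer = Answer(value=None)
    answer.trace.append(f"tool call: count_tanks_tool(zone={zone!r})")
    total = count_tanks_tool(conn, zone)
    if total is None:
        # No evidence in the database: say so instead of guessing a number.
        answer.trace.append("tool returned no records; declining to estimate")
        return answer
    answer.value = total
    answer.trace.append(f"tool returned {total} tanks")
    return answer


if __name__ == "__main__":
    conn = build_demo_db()
    for zone in ("zone-a", "zone-c"):
        result = answer_tank_question(conn, zone)
        print(zone, result.value, result.trace)
```

The trace is a simple version of the "chain of evidence" idea: every number the agent reports is tied to a specific tool call an operator can re-run.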

Evaluating AI in Foreign Policy

Scale AI and the Center for Strategic and International Studies developed a Critical Foreign Policy Decisions Benchmark to understand how leading LLMs responded to 400 expert-crafted diplomatic and military scenarios. Models like Qwen2 72B, DeepSeek V3, and Llama 8B Instruct showed a tendency toward escalatory recommendations, while GPT-4o and Claude 3.5 Sonnet were significantly more restrained. The study also found varying degrees of country-specific bias across all models, often advising less interventionist or escalatory responses toward Russia and China than the United States or the United Kingdom.
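The benchmark's scoring method is not described here. As a hypothetical illustration only, one simple way to surface the kind of country-specific bias reported above is to label each model response as escalatory or not, then compare escalation rates across the countries in the scenarios. The record format, the model name `model-x`, and the labels below are invented for the example and are not results from the study.

```python
from collections import defaultdict


def escalation_rates(records):
    """Share of escalatory recommendations for each (model, country) pair.

    Each record is a hypothetical (model, country, is_escalatory) tuple
    produced by grading one model answer to one scenario.
    """
    totals = defaultdict(int)
    escalatory = defaultdict(int)
    for model, country, is_escalatory in records:
        key = (model, country)
        totals[key] += 1
        escalatory[key] += int(is_escalatory)
    return {key: escalatory[key] / totals[key] for key in totals}


def country_gap(rates, model, country_a, country_b):
    """Positive when the model escalates more often for country_a than country_b."""
    return rates[(model, country_a)] - rates[(model, country_b)]


# Invented, illustrative labels for one hypothetical model.
records = [
    ("model-x", "US", True), ("model-x", "US", True),
    ("model-x", "US", False), ("model-x", "US", True),
    ("model-x", "China", False), ("model-x", "China", True),
    ("model-x", "China", False), ("model-x", "China", False),
]
rates = escalation_rates(records)
print(rates[("model-x", "US")], rates[("model-x", "China")])  # 0.75 0.25
print(country_gap(rates, "model-x", "US", "China"))  # 0.5
```

A real benchmark would also need many scenarios per country and some check that the gap is larger than run-to-run noise before calling it bias.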

“It depends which model you pick. Not all models are trained on the same data, and their model architectures aren’t always the same,” says Yasir Atalan, a data fellow at the Center for Strategic and International Studies who co-authored the benchmarking analysis. “You can train and fine-tune a model, but its baseline preconditions and inclinations can still be different from others. That’s where we need more experimentation. I think Thunderforge can give us a picture of whether these models are complementing, or to what extent they can achieve these goals or fail.”

A separate but similar line of inquiry comes from Jacquelyn Schneider, a Hoover Institution fellow and professor at the Naval Postgraduate School. Last year, Schneider and colleagues at Stanford University evaluated five off-the-shelf LLMs in military and diplomatic decision-making. All models exhibited hard-to-predict escalation patterns. “I expected to see a lot more divergence between LLMs,” Schneider says. “For me, the tendency toward escalation is a puzzle. Is it because the corpus of knowledge focuses only on escalation? De-escalation is hard to study since it never happens.”

Schneider cautions that even tightly scoped AI systems can have unintended strategic effects if commanders trust outputs without fully understanding how they were produced. She says it’s important to train users to build campaigns without the software, or to spot underlying problems that could hinder decision-making during combat. “For the last 20 years, the U.S. has built campaign plans for the global War on Terror without our information structures constantly being targeted as they would in a scenario against a competent adversary. Some data might be manipulated on a day-to-day basis due to electromagnetic interference or cyberattacks, but the scope and scale increases exponentially in wartime, when the software becomes a target. How the system works in a peacetime environment represents the best possible situation, but how it degrades in a combat environment could be the standard of how closely it becomes a part of combat planning processes.”

Thunderforge’s creators and outside experts both emphasize the importance of human oversight. Tadross says Scale AI’s role is to provide the assets that military personnel need to deter conflicts or gain winning advantages if forced to fight. The DOD ultimately defines the doctrine, rules of engagement, and appropriate level of human oversight for any given mission. “While the system can be tasked to generate and assess courses of action based on those defined parameters, the ultimate decision-making authority always rests with the human commander,” Tadross says. “Our responsibility is to ensure the technology provides clear, understandable, and trustworthy support that empowers their judgment and accelerates their decision-making at the speed of relevance.”